Photographs are available here under a Creative Commons license.

The plan

(Sorry, French readers: this one is better suited to English.)

Participate from ENST, Paris, France, in Mozilla 24, a multi-site, multi-continent, high-quality video conference operated from Keio University in Tokyo, Japan. Two simultaneous video streams (one from our conference room to Japan, the other back) let the speakers in our room interact with audiences at the other sites.

The room setup

Video from Paris to Japan was sent using a laptop running FreeBSD 6, with a FireWire card and a 100 Mbps Ethernet port. The PC receives DV video from a professional camera on its FireWire port and re-emits it, encapsulated, on its Ethernet port. Here's a picture of the setup:

The blue cable is the 100 Mbps Ethernet cable. The other cable is the FireWire cable, plugged into the PCMCIA adapter.

Video from Japan was received over IP on a laptop running a lesser-known operating system I had never heard of until today. The laptop receives IP packets from the local Ethernet, sends video out of its VGA port and audio out of, surprise, its audio port. The VGA port (at left) is plugged into the room's video projector; the audio port (at right) is plugged into the room's audio amplifier. The blue cable is the Ethernet cable:

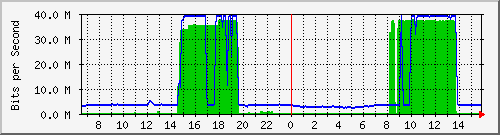

Both channels (outgoing and incoming) carried DV video (your digital camcorder's format), encapsulated in RTP (Real-time Transport Protocol) by a free program called DVTS, at a throughput of over 30 Mbps in each direction. This yielded the following nice MRTG traffic graph on the router port, where you can see the Mozilla 24 traffic from 8:00 to 13:45 as well as the previous day's trials from 14:30 to 19:45. Green is incoming, blue is outgoing:
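
The "over 30 Mbps" figure can be sanity-checked with a back-of-the-envelope calculation. A DV25 stream is made of 80-byte DIF blocks, 150 per DIF sequence, with 12 sequences per frame in PAL (25 frames/s), which gives 28.8 Mbps of raw DV before any packet headers. The Python sketch below assumes 18 DIF blocks per RTP packet (the largest whole number that fits a 1500-byte Ethernet MTU), IPv4 and untagged Ethernet; DVTS's actual packetization may differ, so treat the numbers as an estimate.

```python
# Rough bandwidth estimate for one DV stream carried in RTP/UDP/IPv4.
# Assumptions (not from the post): PAL DV25, 18 DIF blocks per RTP packet,
# no RTP header extensions, untagged Ethernet.

DIF_BLOCK_BYTES = 80            # every DV DIF block is 80 bytes
BLOCKS_PER_SEQUENCE = 150       # DIF blocks in one DIF sequence
RTP, UDP, IPV4 = 12, 8, 20      # header sizes in bytes
ETH_FRAME_OVERHEAD = 14 + 4     # Ethernet header + frame check sequence
ETH_WIRE_OVERHEAD = 8 + 12      # preamble/SFD + inter-frame gap


def dv_stream_mbps(sequences_per_frame=12, frames_per_second=25.0,
                   blocks_per_packet=18):
    """Return (dv_payload, ip_level, on_wire) rates in Mbit/s."""
    blocks_per_second = (sequences_per_frame * BLOCKS_PER_SEQUENCE
                         * frames_per_second)
    packets_per_second = blocks_per_second / blocks_per_packet

    payload = blocks_per_packet * DIF_BLOCK_BYTES       # DV bytes per packet
    ip_packet = payload + RTP + UDP + IPV4              # bytes seen at IP level
    wire = ip_packet + ETH_FRAME_OVERHEAD + ETH_WIRE_OVERHEAD

    def rate(size_bytes):
        # bytes per packet -> megabits per second
        return packets_per_second * size_bytes * 8 / 1e6

    return rate(payload), rate(ip_packet), rate(wire)
```

For example, `print([round(x, 2) for x in dv_stream_mbps()])` prints `[28.8, 29.6, 30.36]`: under these assumptions the headers push a 28.8 Mbps DV stream to roughly 30 Mbps on the wire, per direction. For 525-line DV, `dv_stream_mbps(10, 30000 / 1001)` gives nearly the same figures.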

Local network setup

One lesson we learned was that sending 30 Mbps in both directions simultaneously requires careful cabling if you want to keep packet loss reasonably low. That may go without saying, but obvious things are sometimes worth repeating. Cabling and switches that work perfectly well for everyday, mundane use can turn out to be barely usable under high load. During the trials, DVTS statistics showed up to 5-10% packet loss on the incoming video. Video and sound were still usable, but the sound was noticeably marred by bleeps, much like playing back a damaged DV tape. The irony is that the loss occurred in the last 50 meters, after the video had traveled unimpeded halfway around the globe, from Japan to Europe through the US.

Interestingly, we pinned down the problem when we saw that the same stream, received in our offices (closer to the network backbone, with recent and much shorter cabling), played back perfectly. That comparison was only possible because the stream was received as IP multicast, which lets multiple receivers share the same incoming stream.
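
Loss rates like these can be derived from RTP sequence numbers: every packet carries a 16-bit counter, so gaps in the received sequence reveal lost packets. The Python sketch below shows one simple way to compute the loss fraction. It assumes packets arrive in order and without duplicates, and it is not necessarily how DVTS computes its own statistics.

```python
SEQ_MOD = 1 << 16  # RTP sequence numbers are 16-bit and wrap 65535 -> 0


def rtp_loss_fraction(seqs):
    """Fraction of packets lost, given RTP sequence numbers in arrival order.

    Simplifying assumptions: packets arrive in order, with no duplicates,
    and no single gap is 65536 packets or longer.
    """
    if not seqs:
        return 0.0
    expected = 1  # sequence numbers spanned, first to last inclusive
    for prev, cur in zip(seqs, seqs[1:]):
        # Forward distance modulo 2**16, so a wrap from 65535 to 0 counts as 1.
        expected += (cur - prev) % SEQ_MOD
    lost = expected - len(seqs)
    return max(0.0, lost / expected)
```

With 100 packets sent across a sequence-number wrap and every tenth one dropped, `rtp_loss_fraction` returns 0.1, i.e. 10% loss. Production receivers (see RFC 3550) also handle reordering and duplicates, which this sketch deliberately ignores.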

To track down the packet loss, we tried several hardware configurations: with and without a local switch; both channels on the same wall outlet or on separate ones; each PC connected directly to a wall outlet.

It turns out that some switches are better than others at getting the most out of less-than-perfect cabling. The laptops' Ethernet ports did as well as the best switch we had, so we ended up plugging the DVTS PCs directly into the wall outlets. Putting the two video channels on separate cables made a big difference, and we finally got packet loss to stabilize below 0.1%. That is more than good enough for glitch-free transmission.

Local multicast setup

A functional multicast local area network does not happen by chance. ENST is a partner in the Opera Oberta program, a live opera broadcast from Barcelona transmitted over IP multicast. Until a few years ago, multicast support in routers and switches was buggy to the point of being unusable for high-throughput streams. Nowadays, low-level, widely deployed protocols such as IGMP are implemented correctly in most enterprise-grade equipment. Implementations of higher-level protocols such as PIM, however, are not always quite there and can require tedious tuning, depending on your router software.
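
On the receiving side, IGMP is triggered by the application: when a program joins a multicast group, the host's kernel sends an IGMP membership report, and switches that perform IGMP snooping can then forward the group only to the ports that asked for it. Below is a minimal IPv4 receiver sketch in Python using the standard `socket` module. The group address and port are hypothetical (the post does not give them), and this is not how DVTS itself is implemented.

```python
import socket
import struct

# Hypothetical values: 233.252.0.0/24 is reserved for documentation.
GROUP = "233.252.0.1"
PORT = 8000


def make_mreq(group, iface="0.0.0.0"):
    """Pack an ip_mreq structure: multicast group, then local interface."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(iface))


def open_multicast_receiver(group, port, iface="0.0.0.0"):
    """Bind a UDP socket and join `group` (the kernel then sends an IGMP report)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # Allow other local programs to bind the same port too (behavior varies by OS).
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # Joining the group is what makes the host announce its membership via IGMP.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    make_mreq(group, iface))
    return sock
```

A receiver would then call `sock = open_multicast_receiver(GROUP, PORT)` and read packets with `sock.recvfrom(2048)`. Each additional receiver on the LAN simply joins the same group, which is what allowed the comparison between the conference room and our offices.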

Wide-area network setup

Securing a reliable, high-quality path between France and Japan required a bit of traceroute work and planning by several of the network providers involved: RAP (Paris), Renater (France), GEANT2 (Europe), Abilene (USA) and WIDE (Japan). It also required some setup on ENST's edge routers, to make sure that all traffic to the video endpoint at Keio University (whether we sent the video as unicast or multicast) would leave our site through RAP and the research networks listed above. All we had to do was add two static routes, pointing to our RAP interface, for the video subnet ranges at Keio University.
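
Two static routes are enough because routers pick the most specific matching prefix: a route to a Keio video subnet beats the default route for those destinations, while all other traffic is unaffected. The Python sketch below models that longest-prefix-match lookup with the standard `ipaddress` module. The prefixes and next-hop names are hypothetical documentation values, not the real Keio subnets or ENST's actual routing table.

```python
import ipaddress

# Hypothetical IPv4 routing table: the real prefixes are not given in the post.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "default",       # everyday traffic
    ipaddress.ip_network("198.51.100.0/24"): "RAP",     # video subnet 1 (hypothetical)
    ipaddress.ip_network("203.0.113.0/25"): "RAP",      # video subnet 2 (hypothetical)
}


def next_hop(destination, routes=ROUTES):
    """Return the next hop of the most specific (longest-prefix) matching route."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in routes if addr in net]
    # The default route guarantees at least one match for any IPv4 address.
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[best]
```

Here `next_hop("198.51.100.42")` returns `"RAP"`, while `next_hop("192.0.2.7")` still follows the default route, just as the two static routes diverted only the video traffic.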

We abandoned sending our video as multicast because of multicast route propagation problems "somewhere", which kept the stream from being seen in Japan. Receiving the multicast stream from Japan, on the other hand, worked just fine. Keio University had several correspondents to whom it had to send the final, edited 30 Mbps video stream. Multicast was clearly a net gain there, saving a great deal of bandwidth on their side, since they sent a single copy of the 30 Mbps stream instead of one per site.

The conference

Everything went as planned. We experienced rare glitches and, once, a total freeze for half a second (just short enough to keep us out of panic mode, though my heart skipped a beat), but overall the video and sound received in Paris were smooth and of very high quality, as if they came from the room next door. Here's a picture of the room with some of the speakers, from left to right: Tristan Nitot (Mozilla Europe), François Bancilhon (Mandriva), Charles Schulz (OpenOffice.org), Pierre Beaudouin (Wikimedia France). The real-time edited video from Japan (showing some of the other sites) is projected behind them:

Looking at the Paris video looped back to us (top left in the picture above), I estimated the video round-trip time at about 1 second. Since the ping time from France to Japan is only about 300 ms, a fair amount of that is probably due to buffering in the camera, the video editing equipment at Keio, the video player and the video projector. (Only now, too late, does it occur to me that we could have tested a local loopback to measure the buffering delay on its own.)

I must say that I was quite impressed, dare I say moved, by the result. Seeing talented people from all over the world speak with each other in a public conference transmitted with crystal-clear pictures through thousands of kilometers of optical fiber doesn’t happen every day! But 10 years from now, it will probably be commonplace, once we all get fiber to our bedroom…

The people

Lots of people were involved in this top-notch technical setup: my colleagues Jorge, Yves and Patrick; staff from RAP and Renater, who helped us get started on the tests; Laurent, a French DVTS guru who gave a lot of useful advice; the people from Cerimes, who handled video and audio takes during the conference; the people at Keio University, who set up the hardest, central part of this planetary event; and last but not least, Akira Kanai from WIDE, who did a tremendous amount of work in collaboration with Keio, installing and thoroughly testing the DVTS computers days before the conference and operating them during it.

Many thanks also go to the fine communication attachées from Renater and Mozilla Europe, who provided moral support and extra-nice goodies! My kids loved the tattoos and went positively crazy over the Firefox stickers… they're all over their toy box now…

And thanks to Geneviève for taking care of the kids by herself (not a small feat) while I was (not so far) away from home!
