I have recently converted my self-hosted FreeBSD jails (including this very blog) to the VNET architecture.

A few words about VNET

The purpose of this post is not to explain jails, or VNET, but to provide examples for migrating from the traditional jail networking environment (in my case, using ezjail) to the VNET architecture. There are numerous documents online for jail environments based on iocage, but not many about ezjail-based ones.

Before VNET, networking in jails had severe addressing limitations, in particular on the loopback addresses (::1 and 127.0.0.1) and on the use of IP aliases, which caused numerous configuration headaches. This was due to the jails sharing the network interfaces and the full networking stack with the host. It was possible to alleviate some of this with multiple routing tables (setfib and related tools), but it was still limited.

VNET allows the jails to run networking stacks totally separated from the host’s, as a fully virtualized guest would. As a consequence, it allows running virtual routers with specific firewall filters to better organize and isolate jail networking.

VNET basically works by moving network interfaces to the guest jails, in a separate instance of the network stack, hiding them from the host environment. This is done at jail startup, but it can also be done dynamically to a running jail with:

ifconfig <interface> vnet <jail_id>

VNET works on any kind of interface: physical or virtual. It is thus perfectly possible to assign a physical interface, or a VLAN tagged interface, etc, to a jail.
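As a rough sketch of the dynamic case, the helper functions below wrap the two ifconfig operations involved: vnet hands an interface over to a jail, and -vnet reclaims it for the host. The function names and the IFCONFIG variable are made up for this illustration; epair17b and jailname are placeholders.

```sh
#!/bin/sh
# Illustration only; give_if, reclaim_if and IFCONFIG are hypothetical names.
# IFCONFIG can be pointed at a stub when experimenting away from a FreeBSD host.
IFCONFIG=${IFCONFIG:-ifconfig}

# give_if IFACE JAIL: move IFACE into the jail's network stack.
# JAIL may be a jail name or a numeric JID (see jls).
give_if() {
    $IFCONFIG "$1" vnet "$2"
}

# reclaim_if IFACE JAIL: bring IFACE back into the host's network stack.
reclaim_if() {
    $IFCONFIG "$1" -vnet "$2"
}

# Example (as root): give_if epair17b jailname
```

While the interface is assigned to the jail, it no longer shows up in the host's ifconfig output.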

Enabling VNET

First, we need to enable VNET in the kernel. Starting with FreeBSD 12, the default kernel already includes VNET, so there is nothing to do unless you run a custom kernel. On FreeBSD 11, you need to recompile the kernel after adding the following line to its configuration:

options VIMAGE # Subsystem virtualization, e.g. VNET
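To check whether the running kernel already has VNET before rebuilding anything, the kern.features.vimage sysctl can be queried. A minimal sketch, where has_vnet is just a name chosen for this example:

```sh
#!/bin/sh
# has_vnet is a hypothetical helper name.
# Prints "yes" when the running kernel reports VIMAGE support, "no" otherwise
# (including when the sysctl does not exist).
has_vnet() {
    if [ "$(sysctl -n kern.features.vimage 2>/dev/null)" = "1" ]; then
        echo yes
    else
        echo no
    fi
}

has_vnet
```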

VNET and ezjail

What to do next with ezjail?

ezjail’s configuration files are stored in /usr/local/etc/ezjail, one file per jail, named after the jail. ezjail uses environment variables based on the former jail configuration variables stored in /etc/rc.conf. Under the hood, the system converts these lines to the new jail syntax, in .conf files stored in /var/run.

The line that configures networking looks like the following (may be wrapped on your screen):

export jail_jailname_ip="re0|192.168.0.17,re0|2a01:e34:ec2a:94a0::11,lo0|127.0.0.17"

To convert this configuration to VNET, we have to:

- disable the traditional jail networking system: this is done by providing an empty value for the above line

- enable VNET for the jail

- specify the VNET interface(s) the jail is going to use

Which is done using the following lines:

export jail_jailname_ip=""

export jail_jailname_vnet_enable="YES"

export jail_jailname_vnet_interface="epair17b"

Note that we don’t specify IP addresses or the loopback interface anymore. Configuration will be done by the jail itself, possibly in the regular /etc/rc.conf way:

ifconfig_epair17b="192.168.0.17/24"

ifconfig_epair17b_ipv6="2a01:e34:ec2a:94a0::11/64"
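Because the jail now owns its network stack, it also needs its own default routes. A slightly fuller sketch of the jail's /etc/rc.conf might look like this; the two gateway addresses are assumptions made up for the example, not values taken from the setup described here.

```sh
# /etc/rc.conf inside the jail (sketch; both gateway addresses are assumed)
ifconfig_epair17b="inet 192.168.0.17/24"
# rc.conf(5) documents the _ipv6 variant with a leading inet6 keyword
ifconfig_epair17b_ipv6="inet6 2a01:e34:ec2a:94a0::11/64"
defaultrouter="192.168.0.1"                 # hypothetical IPv4 gateway
ipv6_defaultrouter="2a01:e34:ec2a:94a0::1"  # hypothetical IPv6 gateway
```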

We still have to create the interface the jail is going to use, here epair17b. I chose the epair/if_bridge architecture as it seemed the most flexible and the easiest to get a grip on, but it is also possible to use netgraph-based interfaces, or anything else the system supports.

An epair is a pair of virtual network interfaces linked by a virtual crossover cable. if_bridge is a bridge interface which switches traffic between the interfaces you attach to it. By combining both and adding routers, you can create any virtual network architecture.

To prepare the interfaces,

ifconfig epair17 create

creates two interfaces, epair17a and epair17b.

epair17b will be given to the jail; epair17a will stay on the host, and will have to get connectivity somehow. This is typically done by making it a bridge member.

epair17a may or may not have an IP address assigned to it (it does not need one if it is only used for bridging), but it needs to be up:

ifconfig epair17a up

We also need to add one of the interfaces to a bridge, so it gets connectivity to the rest of the network:

ifconfig bridge0 create up

ifconfig bridge0 addm epair17a
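The manual steps above can be gathered into one small function. This is only a sketch: attach_pair and the IFCONFIG variable are names invented for the example, and the function assumes it is run as root on the host.

```sh
#!/bin/sh
# Sketch only; attach_pair and IFCONFIG are hypothetical names.
# IFCONFIG is a variable so the command can be swapped for a stub when experimenting.
IFCONFIG=${IFCONFIG:-ifconfig}

# attach_pair N BRIDGE: create epairN and plug its "a" end into BRIDGE.
attach_pair() {
    n=$1
    bridge=$2
    # Create the bridge only if it does not exist yet.
    $IFCONFIG "$bridge" >/dev/null 2>&1 || $IFCONFIG "$bridge" create up
    $IFCONFIG "epair${n}" create           # yields epairNa and epairNb
    $IFCONFIG "epair${n}a" up              # the host side must be up
    $IFCONFIG "$bridge" addm "epair${n}a"  # host side becomes a bridge member
}

# Example (as root): attach_pair 17 bridge0
```

The "b" end (epair17b in the example) is left untouched, ready to be handed to the jail.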

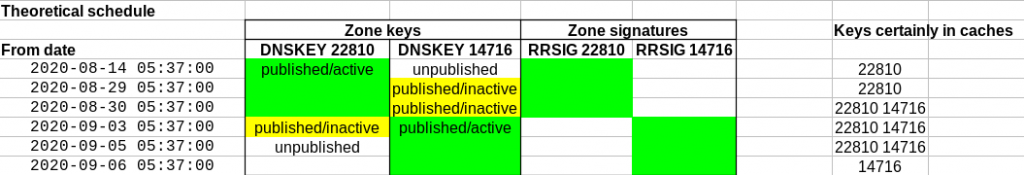

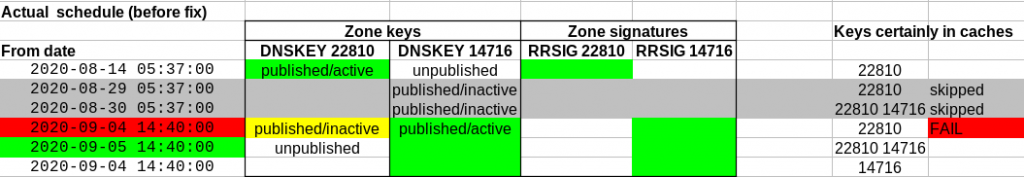

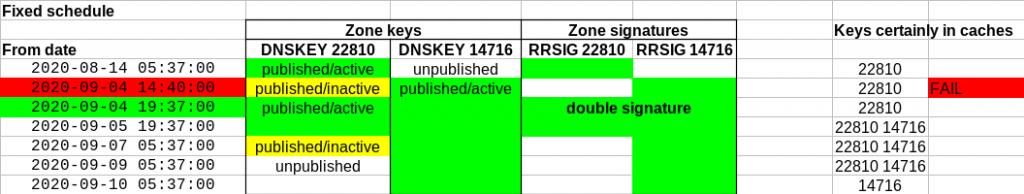

To make it easier to understand, I made a few images showing possible architectures.

First, an example of a basic configuration where all the jails are configured on the same local network as the host through bridge0, mimicking the traditional jail networking.

In the second, the jails are organized on two separate subnetworks, with Host possibly providing IP routing and firewalling.

Lastly, in Figure 3, another architecture where the first group of guests, Guest 1 and Guest 2, is directly configured on the local network, whereas Guest 4 and Guest 5 are connected through the virtual router Guest 3. For example, this can be used in a setting where Guest 1 and Guest 2 provide the front-end to a service, and Guest 4 and Guest 5 provide the backend (databases, etc). Guest 4 and Guest 5 don’t even need full connectivity to the Internet; this can be enforced with firewall rules on Host or Guest 3.
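A possible starting point for the virtual router of the third architecture is sketched below, as the /etc/rc.conf of Guest 3. Every interface name and address in it is hypothetical; the only real knob added compared to an ordinary jail is gateway_enable, which turns on IPv4 forwarding inside the jail's own network stack.

```sh
# /etc/rc.conf inside the router jail (Guest 3)
# All interface names and addresses are made up for illustration.
ifconfig_epair3b="inet 192.168.0.3/24"   # leg on the local network
ifconfig_epair30b="inet 10.0.0.1/24"     # leg on the internal bridge
defaultrouter="192.168.0.1"              # assumed upstream gateway
gateway_enable="YES"                     # forward IPv4 between the two legs
```

Under these assumptions, the guests behind the router would use 10.0.0.1 as their default route, and the rest of the network would need either a route back to 10.0.0.0/24 or NAT on the router.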

Making the configuration persistent

The above commands were meant to explain the workings of the setup, but they are ephemeral. The configurations need to be made persistent in the boot configuration of Host, for example in /etc/rc.conf:

cloned_interfaces="bridge0 bridge1 epair1 epair2 ..."

ifconfig_bridge0="up addm re0 addm epair1a addm epair2a ..."

ifconfig_epair1a="up"

Note that the guest-side epair interfaces don’t need to be brought up from the host configuration. The guest startup code will manage this.

Using jib to create/destroy interfaces dynamically

The above static configuration has a small issue: VNET takes quite some time (tens of seconds) to hand the interface of a stopped jail back to the host, making it invisible in the meantime. This means that a jail restart will fail because the interface it needs is missing.

To avoid this, and to get persistent MAC addresses for the interfaces, which comes in handy, there are two scripts provided in /usr/share/examples/jails: jib (for epair/bridge-based interfaces) and jng (for netgraph-based interfaces).

We just need to install these scripts in /usr/local/sbin and make them executable.

cp /usr/share/examples/jails/jib /usr/local/sbin

chmod a+rx /usr/local/sbin/jib

cp /usr/share/examples/jails/jng /usr/local/sbin

chmod a+rx /usr/local/sbin/jng

jib creates epair interfaces and adds one interface of the pair to a bridge connected to an output interface. For example:

jib addm TEST re0

will create interfaces e0a_TEST and e0b_TEST and add e0a_TEST to a bridge named re0bridge if it exists, or failing that, create such a bridge and connect it to re0. The jail will be configured to use interface e0b_TEST.

The cherry on the cake with jib/jng: they try to keep MAC addresses persistent.

To create and destroy interfaces dynamically with ezjail, instead of tweaking /etc/rc.conf, we only need to add the following lines to the ezjail configuration file for the jail:

export jail_jailname_vnet_enable="YES"

export jail_jailname_vnet_interface="e0b_jailname"

export jail_jailname_exec_prestart0="/usr/local/sbin/jib addm jailname re0"

export jail_jailname_exec_poststop0="/usr/local/sbin/jib destroy jailname"
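Since these four lines only differ by the jail name and the output interface, they can be generated rather than typed. A small sketch, where ezjail_vnet_lines is a name invented for this example and the jail name is assumed to be already in the form ezjail uses for its variables:

```sh
#!/bin/sh
# ezjail_vnet_lines is a hypothetical helper, not part of ezjail or jib.
# Usage: ezjail_vnet_lines JAILNAME OUTPUT_INTERFACE
# Prints the VNET-related lines for the jail's ezjail configuration file.
ezjail_vnet_lines() {
    name=$1
    iface=$2
    cat <<EOF
export jail_${name}_vnet_enable="YES"
export jail_${name}_vnet_interface="e0b_${name}"
export jail_${name}_exec_prestart0="/usr/local/sbin/jib addm ${name} ${iface}"
export jail_${name}_exec_poststop0="/usr/local/sbin/jib destroy ${name}"
EOF
}

ezjail_vnet_lines jailname re0
```

The output can be reviewed and then pasted into the jail's file under /usr/local/etc/ezjail, next to the emptied jail_jailname_ip line.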

Notes

Note that it is possible to set up IP addresses directly on bridge0, bridge1, etc., which may save a couple of epair interfaces in the second and third examples. This is left as an exercise for the reader.

Also, it currently seems difficult or impossible to use VLAN interfaces (if_vlan) in a bridge configuration. I’m still digging into this subject.

References

I have found the following pages useful when preparing my setup and this post:

https://www.reddit.com/r/freebsd/comments/je9oxv/can_i_add_vnet_to_an_ezjail/

https://yom.iaelu.net/2019/03/freebsd-12-vnet-jail-using-bridge-epair-and-pf.html

https://www.cyberciti.biz/faq/how-to-configure-a-freebsd-jail-with-vnet-and-zfs/

Thanks to Jacques Foucry for his work on the nice graphics, Mat Arnold for pointing me to /usr/share/examples/jails and Éric Walter for the idea of the SVG WordPress plugin, avoiding the use of pixelated graphics 🙂